Photo by Carlos Muza on Unsplash

Master THE FIVE SORTING ALGORITHMS in 5 Minutes A Day

-Learn All the 5 Sorting Algorithms, With complete code.

Sorting: What makes it interesting?

▸Sorting is the process of grouping in an increasing or decreasing order based on a linear connection between the data pieces.

An essential key to algorithm design is to use sorting as a fundamental building block because once a set of items is sorted, many other problems become manageable. Let's see some real-world applications.

✔Common Applications of sorting:

🔹Searching 🤔

Binary Search lets you test whether an item is in a dictionary in O(log n) time, once the keys are sorted. Search pre-processing is perhaps the single most important application of sorting.

🔹Element uniqueness 🤔

Are there any duplicates in, given a set of n items? The most efficient algorithm is to sort them and then do a linear scan through them checking all adjacent pairs.

🔹Closest pair🤔

Given a set of n numbers, how do you find the pair of numbers that have the smallest difference between them? After the numbers are sorted, the closest pair of numbers lie next to each other in sorted order.

And a lot of others like: 🔹Frequency distribution 🔹Convex hulls

🥳 Ok Let's dive into the big 5

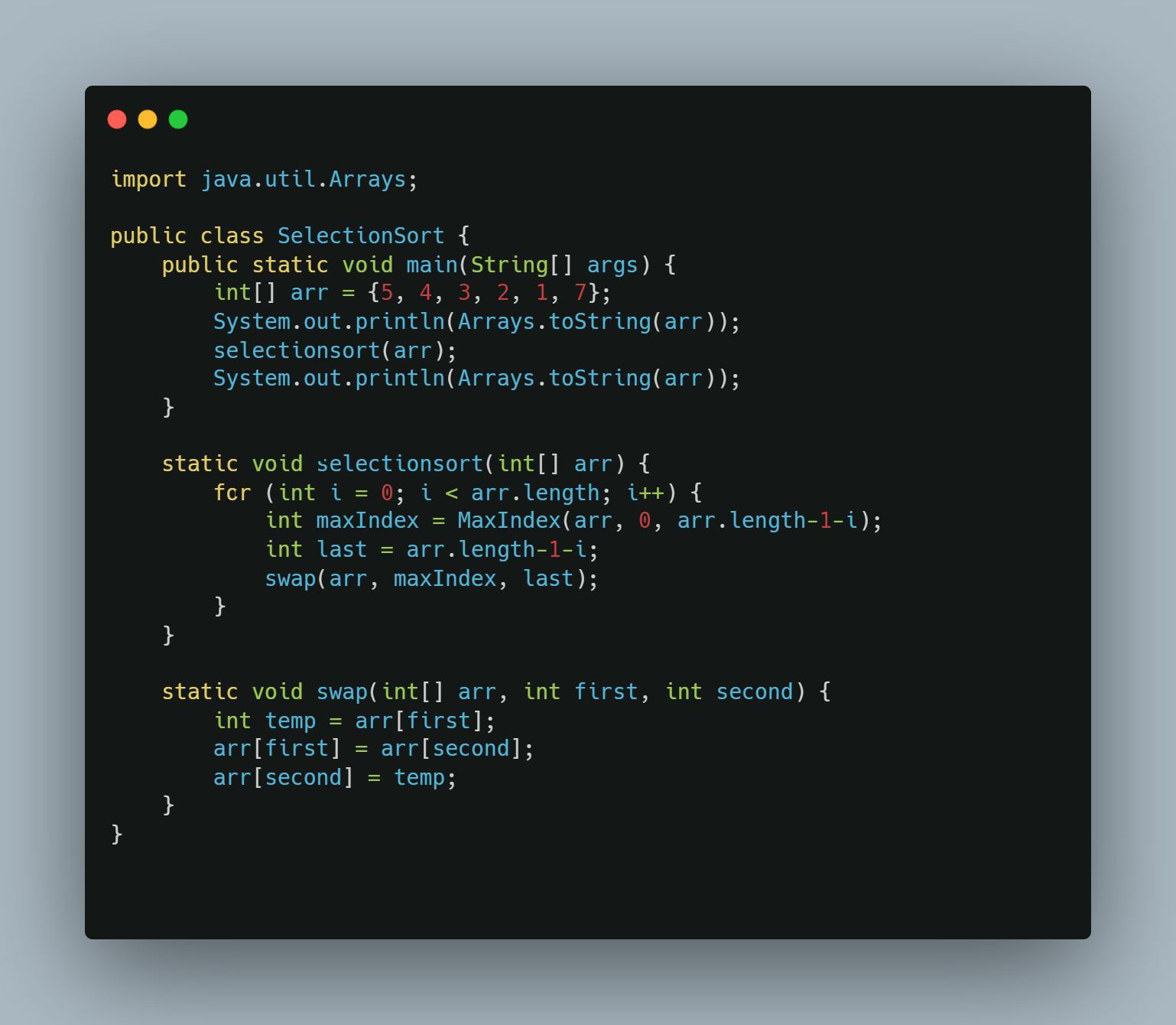

🟢Selection Sort

In our list, there is one exception: selection sort. This is referred to as an intellectual sorting algorithm. Why? Because the time efficiency is always O(n2), which is insufficient. Except for passing the data structure course test, there is no real-world use for selection sort.

🔸pros: Nothing positive😅

🔸cons: Even in the best-case scenario, always run at O(n2).

🔸Practical usage:

Nothing specific, but assist students in obtaining some credits toward their degree.

🟢 Bubble Sort

This is the second exception because bubble sort is too slow to be useful. The practicality of bubble sort is 0 unless the sequence is almost sorted, and the running time is O(n2). This is one of three basic sorting algorithms, along with selection sort and insertion sort, however, it falls short of insertion sort in terms of performance even for tiny sequences, as does selection sort.

🔸pros: Nothing else, perhaps just "a catchy name😅"

🔸cons: It is too slow with polynomial O(n2).

🔸Usage in practice: Implementing it makes for an interesting programming exercise

🟢Insertion sort

Insertion sort is not the most efficient algorithm available, but its strength resides in its simplicity. It is suitable for tiny or elementary applications since it is simple to develop and sufficiently efficient for a limited number of items. The definition of tiny is ambiguous and varies on many factors, although insertion sort is quick enough if the number is less than 50. When the sequence is virtually sorted, insertion sort comes in handy again. Such sequences may appear to be outliers, yet in real-world applications, virtually sorted items are frequently seen. In the worst-case situation, the execution time of insertion sort is O(n2). So far, we have another worthless selection sort alternative.

But if implemented well the run time can be reduced to O(n+k). n is the number of elements and k is the number of inversions (the number of pairs of elements out of order). With this new run time in mind, you can see if the sequence is almost sorted (k is small) the run time can be almost linear which is a huge improvement over the polynomial n^2.

🔸pros : Easy to implement. The more the sequence is ordered the closer is run time to linear time O(n)

🔸cons : Not suitable for large data sets. Still polynomial complexity at worst case

🔸Practical usage

For small applications when the sequence is small (less than 50 elements)

When the sequence is going to be almost sorted.

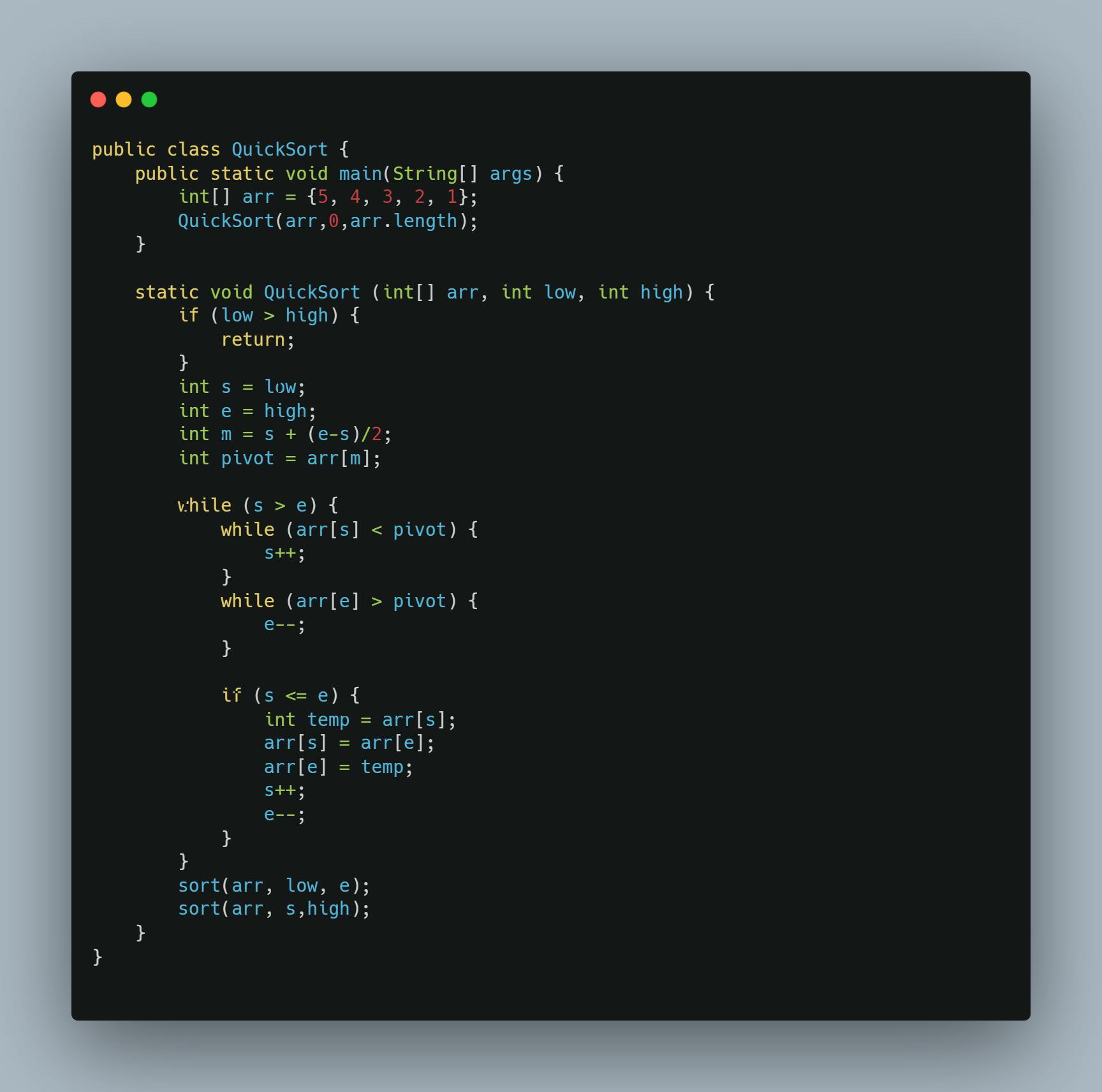

🟢Quick Sort

Insertion sort is not the most efficient algorithm available, but its strength resides in its simplicity. It is suitable for tiny or elementary applications since it is simple to develop and sufficiently efficient for a limited number of items. The definition of tiny is ambiguous and varies on many factors, although insertion sort is quick enough if the number is less than 50. When the sequence is virtually sorted, insertion sort comes in handy again. Such sequences may appear to be outliers, yet in real-world applications, virtually sorted items are frequently seen. In the worst-case situation, the execution time of insertion sort is O(n2). So far, we have another worthless selection sort alternative.

🔸pros:

‣Most often than not runs at O(n*logn).

‣Quick sort is tried and true and has been used for many years in the industry so you can be assured it is not going to fail you.

‣High space efficiency by executing in place.

🔸cons:

‣The polynomial worst-case scenario makes it susceptible to time-critical applications.

‣Provides non-stable sort due to swapping of elements in the partitioning step.

🔸Practical usage:

‣Best choice for general purposes and in-memory sorting.

‣Used to be the standard algorithm for sorting arrays of primitive types in Java.

‣Quick-Sort utility in C programming language is powered by quick sort.

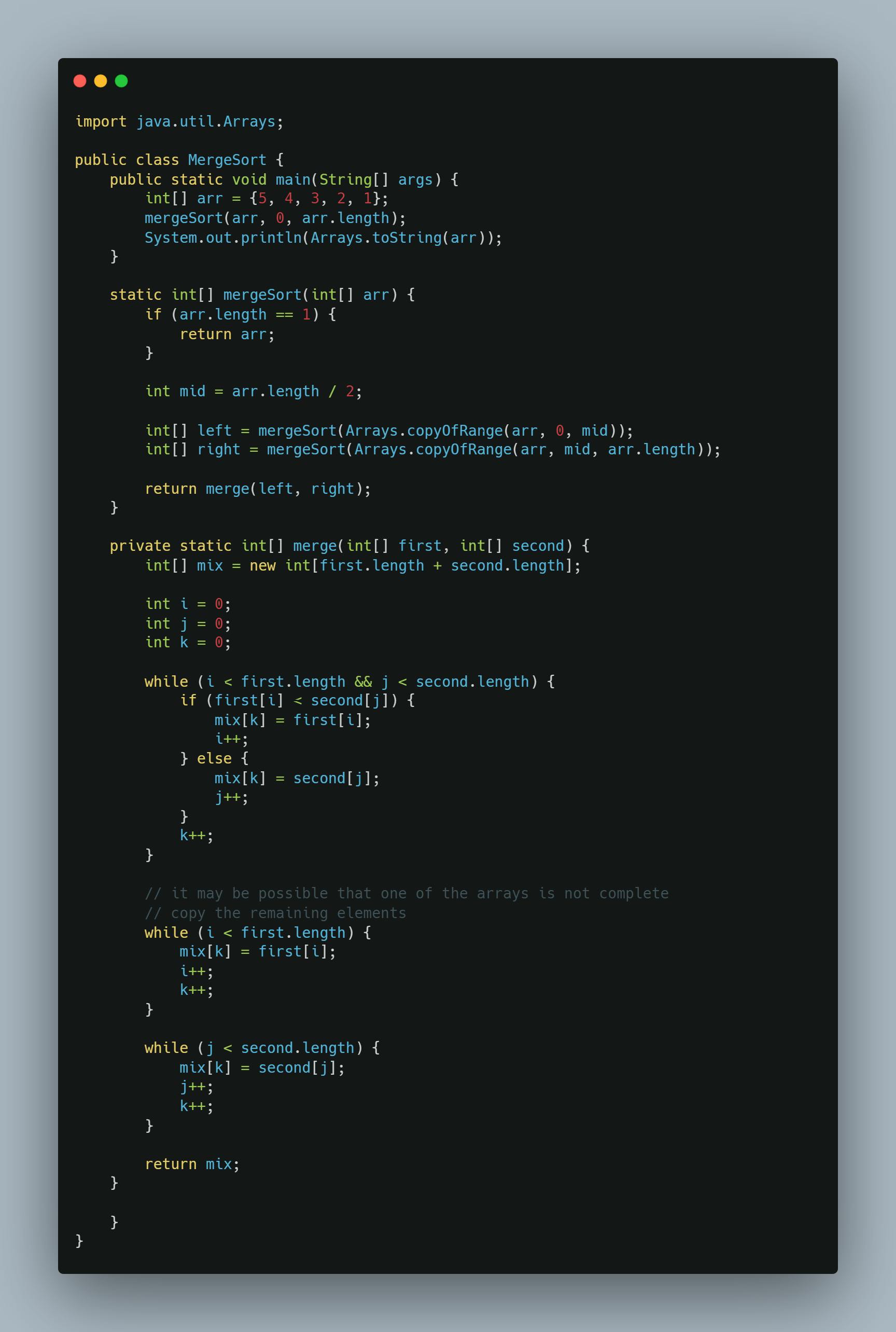

🟢Merge sort

Merge sort is a strong sorting algorithm due to its O(N log N) worst-case scenario run time. The biggest disadvantage of this method is its inefficiency in terms of space. That is, throughout the sorting process, numerous temporary arrays must be constructed, and many elements must be copied. This is not to say that merge sort isn't beneficial. When the data to be sorted is spread over many places such as cache, main memory, and so on, data copying is unavoidable. Merge sort gained prominence mostly due to Tim Peters, who created Tim sort, which is essentially a bottom-up merge sort.

🔸pros:

‣When data must be retrieved from sources other than main memory, this is an excellent alternative.

‣Having an ideal worst-case scenario run time of O(N log N), the Tim sort version is really effective.

🔸cons:

‣There is a lot of overhead in transferring data across arrays and creating new arrays.

‣It's really tough to put it in place for arrays.

‣Inefficient use of space

🔸Usage in practice:

‣When data is stored in many locations such as cache, main memory, external memory, and so on.

‣The GNU sorting tool employs a multi-way merge sort variation.

‣Since 2003, the Tim sort variation has been the primary sorting algorithm in the Python programming language.

‣Since Java version 7, this has been the default sorting method for arrays of an object type.

🔵 Special purpose Sorting Algorithms

Though O(N log N) appears to be an unbreakable cap for sorting algorithms at the moment, this only applies to general-purpose sorts. If the entities to be sorted are integers, texts, or d-tuples, the sorting techniques described above are not applicable. Two of the most well-known special-purpose sorting algorithms are Radix sort and Bucket sort. Their worst-case run time is O(f(n+r)). The range of integers is [0, r-1], and f=1 for bucket sort. Overall, if f(n+r) is much less than the N logN function, then these approaches outperform three strong general-purpose sorting algorithms: merge sort, rapid sort, and heap sort.

🔸pros: They can run faster than N logN

🔸cons:

‣Cannot be used for every type of data

‣Not necessarily always run faster than general-purpose algorithms

🔸Practical usage:

‣When the prerequisites of data types are met then they are the definitive choice.

Thank You Soo Much for your valuable time.😊🥳👋

➲Mithin Dev

I would love to connect and collaborate.

🔹GitHub: https://github.com/mithindev

🔹Twitter: https://twitter.com/MithinDev

🔹LinkedIn: https://www.linkedin.com/in/mithin-dev-a-397983247/

#Letsopensource #webdevelopment #cloud #devops #quality #socialmedia #linkedin #love #content #productivity #developerlife #developercommunity #developpementweb